The Hidden Risks of AI in Medical Diagnosis: What You Need to Know

Published: 23 May 2025

Can you trust a machine to tell you if you are sick? AI in healthcare sounds exciting. It’s fast, it helps doctors and it can even find diseases early. The problem is that it’s not always right.

AI tools can miss key symptoms. Sometimes they don’t work well for women, children or people with darker skin. In other cases, doctors may rely too much on AI and skip important checks. That can lead to misdiagnosis, delayed treatment or the wrong treatment decisions.

You deserve to know what’s going on behind the screen. Today, we are going to break down the hidden risks of using AI in medical diagnosis. Let’s get started.

What Is AI in Medical Diagnosis?

Artificial intelligence is not just science fiction. In healthcare, it’s already being used to help doctors find out what’s wrong with patients faster and more accurately.

But how does it work?

AI learns from huge amounts of medical data like lab results, X-rays, patient records and even doctor notes. It finds patterns in that data that a human might miss. Then it uses those patterns to make predictions, such as which disease a patient might have.

Doctors use AI to:

- Detect cancers in scans

- Spot signs of stroke or heart issues

- Predict which patients might get worse

- Help to read test results more quickly

It all sounds great, and in many cases it is.

But here’s where things get tricky. AI is only as smart as the data it’s trained on. If that data is missing, biased or flawed, then the results can be wrong too. That’s why understanding the risks is so important.

Example

Imagine this: A patient gets a chest X-ray. Instead of waiting hours for a doctor to review it, an AI tool scans the image in seconds, spots early signs of pneumonia and alerts the doctor right away. Fast and helpful, right?

That’s the power of AI.

But this same tool might miss the disease if the patient is a child or someone whose symptoms differ from those in the data it was trained on. That’s where things can go wrong.

Why Understanding the Risks Matters

AI can be exciting. It helps doctors make faster decisions and can even save lives. But if we don’t look closely, we might miss the problems hiding underneath.

Let’s be honest: technology can make mistakes. We have seen phones freeze, apps crash and GPS send us the wrong way. Now imagine a tool like that being used to diagnose cancer or heart disease.

That’s a serious risk.

What Can Go Wrong?

- AI might miss signs of illness if it didn’t learn from enough patient data.

- It may not work well for certain groups like women, children or people with different skin tones.

- Doctors might trust the AI too much and skip their own checks.

- Some tools don’t explain how they made a decision, so it’s hard to know if they are right or wrong.

These are not just small bugs. These are problems that can affect real lives.

Real-Life Impact

Think of someone going to the hospital. They get an AI-powered scan. The AI says “You are fine,” so the doctor sends them home. But the patient was actually in danger and now it’s too late.

That’s why it’s important to know the risks: not to fear AI, but to use it wisely.

Main Risks of AI in Medical Diagnosis

AI is powerful but it’s not perfect. Let’s dive deeper into the main risks that can affect how AI works in medical diagnosis. Understanding these helps patients and doctors use AI more safely.

Bias in Data

AI learns from the data it is given. If this data mostly comes from one group of people, the AI might not work well for others. This is called bias. Bias means the AI could make wrong or unfair decisions. In healthcare, this can lead to some patients being misdiagnosed or ignored.

Key points about bias:

- AI may perform poorly on underrepresented groups.

- Data often lacks diversity in age, gender or race.

- Bias can cause wrong diagnoses for minorities.

- AI trained on limited data may miss rare conditions.

- Fixing bias requires better and more inclusive data.

Example:

An AI system trained mostly on white patients missed early signs of skin cancer in people with darker skin. This delayed treatment for those patients.
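One simple way to spot this kind of bias is to measure how often a tool catches the disease in each group separately, instead of looking at one overall score. The sketch below is only an illustration: the function name `sensitivity_by_group` and the sample records are made up for this article and do not come from any real tool or study.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Return the share of truly sick patients the tool flagged, per group.

    Each record is a tuple: (group, has_disease, ai_flagged).
    """
    sick = defaultdict(int)    # truly sick patients per group
    caught = defaultdict(int)  # sick patients the AI flagged per group
    for group, has_disease, ai_flagged in records:
        if has_disease:
            sick[group] += 1
            if ai_flagged:
                caught[group] += 1
    return {group: caught[group] / sick[group] for group in sick}

# Made-up results, for illustration only
records = [
    ("lighter skin", True, True),
    ("lighter skin", True, True),
    ("lighter skin", True, True),
    ("lighter skin", True, False),
    ("darker skin", True, True),
    ("darker skin", True, False),
    ("darker skin", True, False),
    ("darker skin", True, False),
    ("darker skin", False, False),  # healthy patient, not counted
]

rates = sensitivity_by_group(records)
print(rates)  # {'lighter skin': 0.75, 'darker skin': 0.25}
```

In this toy data the tool catches three out of four cases in one group but only one out of four in the other. A single overall accuracy number would hide that gap.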

Misdiagnosis or Inaccuracy

AI can make mistakes just like humans do. It might give the wrong diagnosis or miss important signs of disease. These errors often happen when AI is trained on incomplete or low-quality data. Inaccuracy can harm patients if doctors rely too much on AI without double-checking.

Key points about misdiagnosis:

- AI depends on the quality of training data.

- Poor data leads to wrong or missed diagnoses.

- AI might confuse one disease for another.

- Errors can delay treatment or cause wrong treatment.

- Always confirm AI results with a doctor.

Example:

A smartphone app that checks skin for problems sometimes said dangerous moles were safe because it didn’t have enough data on rare cancers.

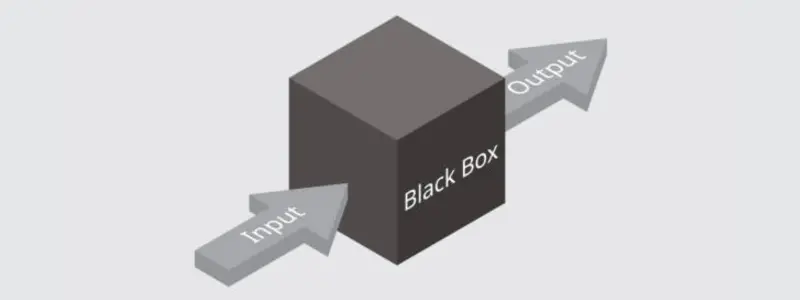

Black Box Problem

Many AI systems do not explain how they reach their decisions. This is called the black box problem. Doctors can’t always see the reasons behind an AI diagnosis. Without understanding, it’s hard to trust AI or spot errors. This makes patients and doctors nervous about relying on AI.

Key points about the black box problem:

- AI decisions are often unclear or hidden.

- Lack of explanation reduces trust in AI.

- Doctors can’t fully verify AI findings.

- Patients may feel unsure about AI-based diagnoses.

- Researchers are working on explainable AI.

Example:

An AI tool diagnosed pneumonia but couldn’t show doctors which signs it used. This made doctors hesitant to trust the result fully.

Overreliance on AI

AI is a helpful tool, but it is not perfect. Sometimes doctors might trust AI too much. They may skip their own checks or ignore other symptoms. Overreliance can lead to missed diagnoses or wrong treatments. Human judgment remains very important in healthcare.

Key points about overreliance:

- AI should support doctors, not replace them.

- Blind trust in AI can cause missed problems.

- Doctors must double-check AI results.

- Overreliance can lead doctors to overlook patient history or symptoms.

- Training helps doctors use AI wisely.

Example:

In some hospitals, doctors accept AI results without question, which can lead to wrong treatments when the AI makes errors.

Data Privacy and Security

AI needs lots of patient data to work well. This data is very personal and sensitive. If it is not protected properly, it can be stolen or leaked. Data breaches can harm patients and reduce trust in healthcare. Hospitals must keep data safe with strong security.

Key points about privacy and security:

- Patient data must be stored securely.

- Cyberattacks can expose private health information.

- Data leaks harm patient trust and safety.

- Strong encryption and access controls are needed.

- Patients should know how their data is used.

Example:

If a major hospital suffers a cyberattack, thousands of patient records could be exposed, leaving patients worried about their privacy.

What Can Be Done to Reduce the Risks?

AI has amazing potential, and the good news is that we can take steps to make it safer and better for everyone. Here are some simple ways to reduce the risks and use AI wisely in medical diagnosis.

Train AI with More Diverse Patient Data

AI learns from examples. The more different kinds of patients it sees, the better it gets. This means including people of all ages, genders and backgrounds in the data. Diverse data helps AI work well for everyone, no matter who they are.

Make AI Decisions Easier to Explain

Doctors and patients want to know why AI says what it does. Making AI explain its decisions clearly builds trust. Researchers are working on “explainable AI” that shows the reasons behind every diagnosis. This will help doctors use AI with confidence.
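To make the idea concrete, here is a tiny sketch of a score that reports its own reasons. It assumes a very simple model in which each finding adds a fixed amount to the score, so every finding’s contribution can be listed. The weights, the finding names and the `explain` function are hypothetical and are not taken from any real diagnostic tool. Real explainable AI methods are far more involved.

```python
import math

# Hypothetical weights for a toy pneumonia risk score (not from a real tool)
WEIGHTS = {"fever": 1.2, "cough": 0.8, "low_oxygen": 2.0}
BIAS = -2.5  # baseline score when no finding is present

def explain(findings):
    """Return (probability, contributions) for a dict of 0/1 findings."""
    # How much each finding pushed the score up
    contributions = {
        name: WEIGHTS[name] * findings.get(name, 0) for name in WEIGHTS
    }
    score = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-score))  # squash the score into 0..1
    return probability, contributions

probability, contributions = explain({"fever": 1, "cough": 0, "low_oxygen": 1})
top_reason = max(contributions, key=contributions.get)

print(f"Estimated risk: {probability:.2f}")
print(f"Biggest factor: {top_reason}")  # Biggest factor: low_oxygen
```

Because the tool shows which finding mattered most, a doctor can check that reason against what they actually see in the patient.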

Always Combine AI with Human Review

AI should help doctors, not replace them. Human experts need to review AI results before making decisions. This team effort catches errors and ensures the best care. Remember, AI is a tool, not the final boss.
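A rule like this can be sketched in a few lines. In the example below, every AI result goes to a doctor, and the AI’s confidence only changes how closely the result is reviewed. The function name `route_result`, the labels and the 0.95 threshold are assumptions made up for illustration, not settings from a real hospital system.

```python
def route_result(ai_label, confidence, threshold=0.95):
    """Decide how a doctor should review an AI finding.

    No path skips the doctor: the AI only sets the priority.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if ai_label == "abnormal":
        return "urgent doctor review"    # possible disease: look now
    if confidence < threshold:
        return "full doctor review"      # AI is unsure: check carefully
    return "routine doctor sign-off"     # AI is confident: still confirmed

print(route_result("abnormal", 0.50))  # urgent doctor review
print(route_result("normal", 0.70))    # full doctor review
print(route_result("normal", 0.99))    # routine doctor sign-off
```

Notice that even a confident “normal” result still needs a doctor’s sign-off. That is the point of combining AI with human review.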

Teach Doctors How to Use AI Tools Wisely

Doctors need training to understand AI’s strengths and limits. When doctors know how to use it properly, they can make better choices. Education helps doctors ask the right questions and check AI findings carefully.

Make Privacy a Top Priority

Patient data must be safe and private. Hospitals and AI companies must use strong security tools to protect this data. Patients should feel confident that their information is handled carefully and won’t be shared without permission.
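One common protective step is to remove names and replace patient IDs with coded tokens before data reaches an AI tool. The sketch below uses Python’s standard `hmac` and `hashlib` modules. The field names, the sample record and the `strip_identifiers` helper are hypothetical. This is pseudonymization only: it is not full anonymization, and it does not replace encryption or access controls.

```python
import hashlib
import hmac

def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Turn a patient ID into a stable token using a keyed hash."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()

def strip_identifiers(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and swap the ID for a token."""
    direct_identifiers = {"name", "address", "phone"}  # assumed field names
    cleaned = {k: v for k, v in record.items() if k not in direct_identifiers}
    cleaned["patient_id"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned

# Example only: a real key must be generated and stored securely
key = b"example-key-do-not-use"
record = {
    "patient_id": "12345",
    "name": "Jane Doe",
    "phone": "555-0100",
    "age": 54,
    "scan_result": "clear",
}

safe_record = strip_identifiers(record, key)
print(sorted(safe_record))  # ['age', 'patient_id', 'scan_result']
```

The same patient always gets the same token, so records can still be linked for care. Without the secret key, the token cannot easily be traced back to the original ID.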

Conclusion

AI is changing healthcare in amazing ways. It can help doctors find diseases faster and more accurately. But like any tool, AI comes with risks that we must understand and manage.

By knowing the hidden risks, such as bias, mistakes and privacy concerns, we can use AI more wisely and safely. Remember, AI works best when paired with skilled doctors and good data.

The future of medical diagnosis is bright, but it depends on careful steps today. Are you ready to embrace AI wisely for better health?

Risks of AI in Medical Diagnosis – FAQs

Here are common queries about AI risks in diagnosis:

You have the right to ask your doctor directly if they are using AI tools during your visit. Many doctors will mention it, especially if AI helps them spot something important in your scans or tests. If you’re curious, simply ask: “Are you using any AI tools to help with my diagnosis?”

No, you shouldn’t, because AI is designed to help doctors, not replace them. Think of it like a very advanced calculator that helps doctors catch things they might miss. Your doctor still makes the final decisions about your care and treatment.

You can always ask for a second opinion from another doctor, just as with any diagnosis. Tell your doctor about your concerns and ask them to explain their reasoning. Remember, you have the right to question any medical decision and seek additional expert opinions.

Yes, in many countries, AI tools used for diagnosis generally must be cleared or approved by health authorities before doctors can use them. In the US, the FDA (Food and Drug Administration) reviews these tools. However, regulations are still catching up with the fast pace of AI development.

AI accuracy varies depending on the specific tool and medical condition being diagnosed. For some tasks like reading certain types of scans, AI can be as accurate as or sometimes more accurate than doctors. However, AI works best when combined with human expertise, not as a replacement.

The cost impact varies. AI might reduce some costs by speeding up diagnoses and catching diseases early. However, the technology itself can be expensive to develop and implement. Over time, AI could help make healthcare more efficient and potentially more affordable.

Yes, you can discuss this with your healthcare provider and express your preferences. However, keep in mind that refusing AI assistance might mean missing out on potentially helpful diagnostic insights. It’s best to have an open conversation with your doctor about your concerns.

If an AI tool makes an error, your doctor’s own review, informed by clinical experience, should catch it. If a mistake does slip through, the same medical malpractice protections that apply to human errors would typically apply. Always report concerns to your healthcare provider immediately.

Healthcare providers must follow strict privacy laws, such as HIPAA in the US and GDPR in the EU, when using AI tools with your data. Your information should be encrypted and anonymized when possible. You can also ask your doctor or hospital about their specific data protection policies.

No, AI is not expected to replace doctors entirely. Medicine involves complex human interactions, emotional support and nuanced decision-making that AI cannot replicate. AI will likely continue to serve as a powerful assistant tool, helping doctors provide better and faster care while doctors focus on patient relationships and complex medical decisions.
