7 Real-Life AI Failures in Healthcare and What We Learned

Published: 3 Jul 2025

AI sounds like the future, right? Smart tools. Fast answers. Better care. But sometimes, things don’t go as planned. In healthcare, even small mistakes can lead to serious harm. And when AI fails, it doesn’t just mean a broken app; it can mean a wrong diagnosis, a missed disease or a risk to someone’s life. That’s why this topic matters so much.

In this blog, we will walk through real examples where AI didn’t work in hospitals and clinics. We will keep things simple and clear. We’ll also explain why these failures happened and what we can learn from them.

Ready to see where things went wrong… and how we can do better? Let’s get started.

What Is AI in Healthcare?

AI stands for artificial intelligence. In simple terms, it means computer systems that can “learn” patterns from data and use them to make predictions or decisions. Instead of following only fixed, hand-written rules, they learn from examples, and they can improve when they are retrained on more of them.

In healthcare, AI is used to help doctors, nurses and hospitals work faster and more accurately. But it’s still a tool, not a replacement for real people.

Let’s look at a few real-life examples you may have seen or heard about:

- Chatbots for Symptoms: Some apps ask you questions about your health and suggest what to do next.

- AI Tools for X-rays: These tools can scan images and help in finding things like broken bones or lung problems, sometimes faster than humans.

- Hospital Scheduling Assistants: AI can organize appointments, staff shifts and even patient flow in busy hospitals.

Sounds helpful, right? But as you will see in the next section… even smart machines can make big mistakes.

Why Does AI Fail in Healthcare?

AI has huge potential in healthcare. It can help doctors make faster decisions, reduce hospital wait times and even detect diseases early. But despite all that promise, many AI tools have failed when used in real-world medical settings.

Let’s explore the major reasons why.

1. Bad or Biased Data

AI depends completely on the data it is trained on. Just like a student learning from books, AI learns by reading medical records, images and patient histories. If the training data is wrong, incomplete or biased, the AI will learn the wrong things.

For example, if the data mostly comes from one group of people, say White male patients, the AI may not work well for women, children or people from other ethnic backgrounds. This is called data bias, and it’s a common reason why AI makes unfair or unsafe decisions.

When the data is biased, the AI becomes biased too. And that leads to wrong diagnoses, missed symptoms and unequal treatment.

2. Not Tested in Real Hospitals

Many AI tools are tested in controlled lab settings, not in real, busy hospitals. In real life, hospitals deal with noise, time pressure, missing records and human emotions. If an AI hasn’t been tested in those conditions, it can easily fail.

Just because something works in a controlled setting doesn’t mean it will work everywhere.

Example: An AI system for diagnosing eye disease worked well in controlled tests. But when it was used in clinics in Thailand, it struggled. Why? Clinic lighting was poor, staff used the cameras differently and internet connections were slow, conditions the system hadn’t been designed for.

3. Poor Communication Between Humans and AI

AI can give answers but can it explain them? That’s the big problem.

Doctors don’t just need a “yes” or “no” from a machine. They need to know why the AI made that decision. If the AI can’t explain its reasoning clearly, doctors may ignore it or trust it too much without checking.

This issue is called lack of transparency or “black-box AI.” It means the decision process is hidden, even from the people using it.

Example: Imagine an AI tool for detecting heart problems flags a patient as high-risk but doesn’t show why. The doctor disagrees and ignores the alert. Later, the patient has a heart attack. If the AI had explained its reasoning, the doctor might have taken it seriously.

4. Strict Rules and Slow Approvals

Healthcare is not like other industries. You can’t just build an app and launch it overnight. Every tool must follow strict medical rules to make sure it’s safe and ethical. These include:

- FDA approvals for medical devices in the U.S.

- HIPAA laws for patient privacy

- Clinical trials to test effectiveness and safety

Some AI companies move fast and cut corners on these steps. As a result, their tools can be rejected by regulators or pulled from use.

Example: Some companies trained AI tools on patient data without permission. That broke privacy laws and led to legal trouble, even if the tool worked.

Following rules may slow things down but skipping them can lead to failure or even lawsuits.

5. No Human Touch

AI is fast and smart but it’s not human.

It doesn’t understand tone, feelings or context. It can’t ask follow-up questions or offer emotional support. In healthcare, where every patient is unique and often scared, this matters a lot.

Example: Imagine a patient asks a chatbot about chest pain. The bot gives basic advice and says “No emergency.” A nurse might have noticed the patient’s nervous tone and asked more questions. Small human details like that can save lives.

AI should assist, not replace. It needs to work alongside human care, not try to copy it.

7 Real Examples of AI Failures in Healthcare

AI is everywhere in healthcare now, from hospitals and labs to smartphones and virtual doctor visits. It promises speed, accuracy and smart decision-making. But not all AI tools work the way they should.

Some systems have misdiagnosed patients. Others gave unsafe treatment suggestions. A few caused doctors to lose trust in technology altogether.

Let’s look at seven powerful examples where AI failed in real-life healthcare situations and what we can learn from them.

1. IBM Watson for Cancer Treatment

IBM Watson was once called the future of cancer care. It promised to help oncologists recommend the best treatments, fast. IBM claimed Watson could read thousands of research papers in seconds and give smart, personalized answers for every cancer case.

But once doctors began using it, problems surfaced. Internal IBM documents reported in 2018 showed that Watson had suggested cancer treatments that were unsafe and incorrect. In one test case, it recommended a drug with a serious bleeding risk for a lung cancer patient who was already bleeding severely.

Why did this happen? Watson had been trained largely on a small number of hypothetical cases and the treatment preferences of a few doctors at Memorial Sloan Kettering Cancer Center in New York. It lacked real-world data from different countries, hospitals and patient types, and it was not tested broadly before being promoted globally.

Eventually, hospitals started pulling out. In 2022, IBM sold off its Watson Health business after spending billions on it.

🔑 Key Takeaway:

AI must be trained on wide, diverse and real-world medical data. Narrow training leads to narrow and risky decisions.

2. Google’s Eye Disease Scanner in Thailand

Google built an AI tool to detect diabetic retinopathy, an eye disease that can lead to blindness. In lab tests, its accuracy was close to that of expert eye doctors.

But when the tool was introduced in clinics in Thailand, the real-world results were disappointing.

Nurses struggled with poor lighting, slow internet and inconsistent equipment. The system rejected images it judged too blurry or low-quality to read, and more than 20% of scans were rejected, so some patients had to come back or be sent elsewhere. This slowed down care and frustrated clinic staff.

The problem was not just the AI’s logic; it was also the environment the AI was placed in. The model hadn’t been prepared for the conditions of rural clinics or the pressure nurses face daily.

🔑 Key Takeaway:

An AI that works in the lab may fail in the field. Always test AI tools in the same places they’ll actually be used.

3. Babylon Health Chatbot — Gender-Biased Advice

Babylon Health created a symptom-checker chatbot used by thousands of patients in the UK. It was meant to give fast advice when people felt sick, based on the symptoms they typed in.

But tests showed a shocking flaw.

When a man reported chest pain, the bot said he might be having a heart attack. When a woman entered the same symptoms, it suggested a panic attack. The bot gave different advice even though the symptoms were identical.

Why? The most likely explanation is biased training data. The tool was built on general medical information that didn’t include enough examples of how heart attacks present in women.

This created real risk. Women might delay care based on wrong advice and doctors could lose trust in the tool.

🔑 Key Takeaway:

AI must be trained on diverse patient profiles. Gender, age and background all affect medical symptoms and safety.
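One simple way to catch this kind of bias is a counterfactual test: send the same case twice, changing only the sex field, and flag any case where the advice changes. The sketch below is illustrative only. `triage` is a hypothetical stand-in for a symptom checker, not Babylon’s system, and its rule is deliberately biased so the test has something to catch.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Case:
    age: int
    sex: str            # "male" or "female"
    symptoms: frozenset


def triage(case: Case) -> str:
    """Hypothetical, deliberately biased toy rule standing in for a chatbot."""
    if "chest pain" in case.symptoms:
        # The built-in flaw: identical symptoms, different advice by sex.
        return "possible heart attack" if case.sex == "male" else "possible panic attack"
    return "self-care"


def counterfactual_sex_check(cases):
    """Return (case, advice, swapped_advice) where swapping only sex changes the advice."""
    flagged = []
    for case in cases:
        swapped = replace(case, sex="female" if case.sex == "male" else "male")
        advice, swapped_advice = triage(case), triage(swapped)
        if advice != swapped_advice:
            flagged.append((case, advice, swapped_advice))
    return flagged


cases = [
    Case(59, "female", frozenset({"chest pain", "nausea"})),
    Case(30, "male", frozenset({"sore throat"})),
]
for case, before, after in counterfactual_sex_check(cases):
    print(f"{case.age}-year-old {case.sex}: '{before}' vs. swapped: '{after}'")
```

Some differences by sex are medically legitimate, so flagged cases should go to clinicians for review rather than being treated as automatic proof of bias.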

4. COVID-19 AI Prediction Models

When COVID-19 hit the world in 2020, researchers rushed to build AI models to predict which patients would become severely ill, need ICU care or die.

Hospitals, governments and even tech companies began relying on these tools.

But a systematic review in the British Medical Journal (BMJ), first published in 2020 and updated as new models appeared, assessed over 100 COVID-19 prediction models and rated nearly all of them at high risk of bias. Many used small and incomplete datasets. Others were trained only on patients from one country or hospital.

Models like these can give misleading predictions, which risks leaving hospitals overprepared or underprepared for patient surges.

In a time of global crisis, faulty AI added confusion instead of clarity.

🔑 Key Takeaway:

In emergencies, AI must be transparent, well-tested, and based on fresh, diverse data. Bad models can make crises worse.
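One way to expose a model that only works for the hospital it was built in is to test it on a hospital it never saw during training. Below is a minimal sketch of such a “leave-one-site-out” split. It assumes each patient record carries a hypothetical `site` field; the records themselves are made up.

```python
from collections import defaultdict


def leave_one_site_out(records):
    """Yield (held_out_site, train, test) so that each test set is one whole site.

    Every split trains on all other sites and tests on a site the model never saw,
    which is closer to deploying in a new hospital than a random split.
    """
    by_site = defaultdict(list)
    for record in records:
        by_site[record["site"]].append(record)
    for held_out in sorted(by_site):
        train = [r for site, rs in by_site.items() if site != held_out for r in rs]
        yield held_out, train, by_site[held_out]


records = [
    {"site": "Hospital A", "patient_id": 1},
    {"site": "Hospital A", "patient_id": 2},
    {"site": "Hospital B", "patient_id": 3},
    {"site": "Hospital C", "patient_id": 4},
]
for site, train, test in leave_one_site_out(records):
    print(site, "train:", len(train), "test:", len(test))
```

In practice you would fit the model on `train` and measure its accuracy on `test` for each site. A large gap between sites is a warning sign. scikit-learn provides a similar splitter, `LeaveOneGroupOut`.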

5. Epic’s AI Sepsis Alert System

Epic Systems is a giant in hospital software. Its sepsis-prediction AI was supposed to catch early signs of sepsis, a life-threatening reaction to infection that can worsen quickly and is hard to spot.

But a 2021 study in JAMA Internal Medicine, which tested the model on patients at the University of Michigan’s hospitals, found that it missed about two out of every three sepsis cases. It also raised alerts for about 18% of all hospitalized patients, most of whom did not have sepsis.

When alerts fire that often, clinicians tend to tune them out. This problem is known as “alarm fatigue,” and it means real danger gets lost in machine noise.

Part of the problem, critics said, was that the model learned what sepsis looked like from billing codes in historical hospital data, which don’t reliably show when sepsis actually began. The model was also proprietary and not shared openly, so outside researchers couldn’t spot its flaws early.

🔑 Key Takeaway:

An AI alert system must be both accurate and useful. If it cries wolf too often, it becomes invisible.
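Why would so many alerts be false? When a condition is fairly rare, even a reasonably specific model raises far more false alarms than true ones. The short calculation below uses Bayes’ rule to get the positive predictive value, which is the share of alerts that are real. The three input numbers are illustrative assumptions chosen for this example.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Share of alerts that are true cases, via Bayes' rule."""
    true_alerts = sensitivity * prevalence               # sick and flagged
    false_alerts = (1 - specificity) * (1 - prevalence)  # healthy but flagged
    return true_alerts / (true_alerts + false_alerts)


# Illustrative assumptions: the model catches 1 in 3 real cases (sensitivity 0.33),
# stays quiet for 83% of patients without the condition (specificity 0.83),
# and 7% of admitted patients actually develop the condition (prevalence 0.07).
ppv = positive_predictive_value(sensitivity=0.33, specificity=0.83, prevalence=0.07)
print(f"Share of alerts that are real: {ppv:.0%}")
```

With these numbers, only about one alert in eight points to a real case. That is how alarm fatigue sets in even when the model is not useless.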

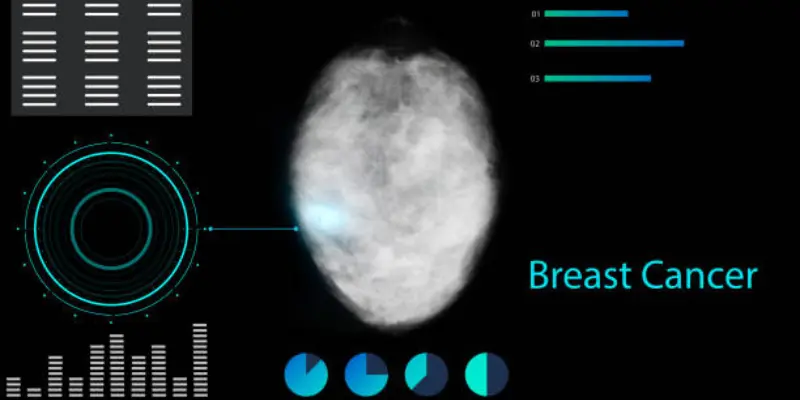

6. Breast Cancer Detection AI — Racial Bias in Practice

In the U.S., researchers developed an AI tool to detect breast cancer from mammograms. It showed high accuracy in early tests and was celebrated as a major step forward.

But later studies revealed a disturbing problem.

The model performed worse for Black women: they were about 50% more likely than White women to receive a false-positive result. That can mean more unnecessary callbacks, biopsies and stress.

Why did this happen? A likely cause is training data that included too few images from Black women. Breast density varies between populations and imaging equipment varies by location, and the model had not seen enough examples of that variation.

🔑 Key Takeaway:

AI must work equally well for all groups. If it’s not tested for racial fairness, it can worsen existing healthcare gaps.
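A basic fairness check is to report error rates separately for each group instead of one overall accuracy number. The sketch below compares false-positive rates across groups. The records and group labels are made-up toy data, and a real audit would also compare false negatives and use far larger samples.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """False-positive rate per group: healthy patients flagged / all healthy patients."""
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:            # patient does not have the disease
            negatives[group] += 1
            if predicted:         # ...but the model flagged them anyway
                false_pos[group] += 1
    return {group: false_pos[group] / negatives[group] for group in negatives}


# Toy data: (group, model_flagged, truly_has_cancer)
records = [
    ("Group A", True, True), ("Group A", False, False), ("Group A", True, False),
    ("Group A", False, False), ("Group A", False, False), ("Group A", False, False),
    ("Group B", True, True), ("Group B", True, False), ("Group B", True, False),
    ("Group B", False, False), ("Group B", False, False), ("Group B", False, False),
]
rates = false_positive_rate_by_group(records)
print(rates)
```

Here Group B’s false-positive rate is twice Group A’s, a gap that a single overall accuracy figure would hide.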

7. COVID Chest X-ray AI — Learning the Wrong Thing

During the pandemic, many research teams built AI models to detect COVID-19 from chest X-rays. Some reported high accuracy and looked like potential lifesavers for overworked hospitals.

But when researchers at the University of Washington examined such models closely, they found the models were learning shortcuts.

Instead of looking at the lungs, the models picked up on text markings, patient positioning and other details that differed between the image sources used for COVID-positive and COVID-negative scans. They weren’t really detecting COVID at all; they were matching source-specific patterns.

When tested on X-rays from new hospitals, their performance dropped sharply. Their “intelligence” was just a clever trick.

🔑 Key Takeaway:

AI can fool even experts by finding shortcuts in data. Models must be stress-tested to make sure they’re solving the right problem.
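The toy example below shows how a shortcut can win. It uses a deliberately simple “learner” that picks whichever single feature best matches the labels in its training data. In this invented data, a hospital marker lines up perfectly with the COVID label, while the real lung finding is only mostly right. The learner therefore picks the marker and then fails at a new hospital. The feature names and data are hypothetical.

```python
def accuracy(feature, rows):
    """Fraction of rows where predicting label = feature value is correct."""
    return sum(row[feature] == row["covid"] for row in rows) / len(rows)


def pick_best_feature(rows, features):
    """A one-feature 'model': choose the feature that best matches the label."""
    return max(features, key=lambda f: accuracy(f, rows))


features = ["lung_opacity", "hospital_marker"]

# Training scans: every COVID-positive scan came from a hospital whose marker
# appears on the image, so the marker matches the label 100% of the time.
train = [
    {"lung_opacity": 1, "hospital_marker": 1, "covid": 1},
    {"lung_opacity": 1, "hospital_marker": 1, "covid": 1},
    {"lung_opacity": 0, "hospital_marker": 1, "covid": 1},  # subtle case
    {"lung_opacity": 0, "hospital_marker": 0, "covid": 0},
    {"lung_opacity": 1, "hospital_marker": 0, "covid": 0},  # other lung disease
    {"lung_opacity": 0, "hospital_marker": 0, "covid": 0},
]

# Scans from a new hospital: no marker, but the lung findings still hold.
new_hospital = [
    {"lung_opacity": 1, "hospital_marker": 0, "covid": 1},
    {"lung_opacity": 1, "hospital_marker": 0, "covid": 1},
    {"lung_opacity": 0, "hospital_marker": 0, "covid": 0},
    {"lung_opacity": 0, "hospital_marker": 0, "covid": 0},
]

chosen = pick_best_feature(train, features)
print("Model relies on:", chosen)
print("Training accuracy:", accuracy(chosen, train))
print("New-hospital accuracy:", accuracy(chosen, new_hospital))
print("Lung-based accuracy at new hospital:", accuracy("lung_opacity", new_hospital))
```

The shortcut scores perfectly in training and no better than a coin flip at the new hospital. Testing on unseen sites, or hiding markers and checking whether performance collapses, exposes this kind of shortcut.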

The Real Cost of These Mistakes

AI failures in healthcare are not just technical glitches. They have real consequences for patients, doctors and entire healthcare systems.

Let’s take a moment to think about what really happens when an AI tool gets it wrong.

1. A Wrong Diagnosis Can Mean a Missed Life-Saving Treatment

Imagine this:

A young woman feels chest pain and checks her symptoms with an AI chatbot. It tells her it’s just anxiety, so she stays home. Later that night, she has a heart attack. When AI misreads symptoms or misses danger signs, patients lose precious time. And in healthcare, time often means life.

No one wants to imagine a tool meant to help… making things worse. But that’s exactly what happens when AI is rushed, untested or biased.

2. Trust Is Lost Between Patients and Doctors

Doctors are trained to think critically. But when AI tools constantly give false alarms or make unclear recommendations, trust breaks down. Doctors may start to ignore alerts, skip features or stop using the tool altogether.

Worse, patients might feel confused. They wonder, “Should I listen to my doctor or the AI result I just saw?”

When people can’t trust the technology or the person using it, everyone loses.

3. Hospitals and Tech Companies Waste Billions

Let’s not forget the money side. IBM Watson Health cost over $4 billion and it still failed. Some hospitals that bought the system had to abandon it, retrain staff and start over. Similar failures with Epic’s sepsis alert, Babylon’s chatbot and COVID models cost time, resources and trust.

AI tools are expensive to develop. If they aren’t built right from the start, for example if teams skip ethics review, testing or fairness checks, the result can be huge financial losses and public embarrassment.

Some companies never recover.

How to Prevent AI Failures in Healthcare

We have seen how powerful and dangerous AI in healthcare can be when things go wrong. But it doesn’t have to be this way.

AI isn’t the enemy. The problem is how we build it, how we train it and how we use it. If we want to create tools that truly help patients and doctors, we need to follow some smart steps.

Let’s break it down.

1. Use Clean, Diverse and Fair Data

AI only knows what we teach it. If we feed it data from one country, one gender or one race, it won’t perform well for everyone else.

That’s why AI models must be trained on:

- Data from different regions and hospitals

- Patients of all ages, races and genders

- Updated and well-labeled medical records
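Before training, a team can at least check who is in the data. Here is a minimal sketch. It assumes each record has hypothetical demographic fields such as `sex` and `age_band`, and it uses an arbitrary 10% minimum share as the cutoff. A real project would set targets based on the population the tool is meant to serve.

```python
from collections import Counter


def underrepresented(records, field, min_share=0.10):
    """Return {group: share} for groups making up less than min_share of the data."""
    counts = Counter(record[field] for record in records)
    total = sum(counts.values())
    return {
        group: count / total
        for group, count in counts.items()
        if count / total < min_share
    }


# Toy dataset of 100 patients (shared dict objects are fine: we only read them).
records = (
    [{"sex": "male", "age_band": "40-64"}] * 70
    + [{"sex": "female", "age_band": "40-64"}] * 25
    + [{"sex": "female", "age_band": "65+"}] * 5
)
for field in ("sex", "age_band"):
    print(field, underrepresented(records, field))
```

Good representation alone doesn’t guarantee fairness. Performance still has to be checked group by group once the model is trained.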

2. Test AI in Real-World Settings

Just because an AI works well in a lab doesn’t mean it will work in a busy emergency room. Real hospitals are loud, messy and fast. The internet might be slow. The lighting might be poor. Nurses might be stressed.

So, test your AI tool in the same setting where it will actually be used. And test it with real medical teams, not just tech staff.

Example:

Google’s eye-scanning AI struggled in clinics because of poor lighting and workflow mismatches, problems that didn’t exist in the lab.

3. Keep Humans in Control

AI should never replace doctors. It should support them like a smart assistant.

Doctors need tools that explain why they reached a decision, not just what the decision is. That’s called explainability. Without it, doctors may either ignore the AI or trust it blindly. Both are dangerous.

Example:

If an AI says “Don’t worry,” but doesn’t explain why, a doctor may overlook something serious or not know what to do next.
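One simple way to give a reason along with an answer is to use a model whose score breaks down into parts, such as an additive risk score, and to show each factor’s contribution. The weights, feature names and cutoff below are invented for illustration. They are not a validated clinical score.

```python
# Hypothetical weights for an illustrative additive risk score (not clinically validated).
WEIGHTS = {
    "age_over_65": 1.2,
    "smoker": 0.8,
    "high_blood_pressure": 0.9,
    "abnormal_ecg": 2.0,
}
ALERT_THRESHOLD = 2.5  # assumed cutoff for flagging a patient


def explain_risk(patient):
    """Return (score, flagged, contributions sorted from largest to smallest)."""
    contributions = {
        factor: weight for factor, weight in WEIGHTS.items() if patient.get(factor)
    }
    score = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: item[1], reverse=True)
    return score, score >= ALERT_THRESHOLD, ranked


score, flagged, reasons = explain_risk({"age_over_65": True, "abnormal_ecg": True})
print(f"score={score:.1f}, flagged={flagged}")
for factor, points in reasons:
    print(f"  {factor}: +{points}")
```

More complex models need dedicated explanation techniques, but the goal is the same: show the doctor which factors drove the result so they can check it against what they see.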

4. Balance Accuracy with Safety

A good AI doesn’t just get the right answer; it also avoids creating panic or false hope.

That means we need to reduce:

- False positives (telling healthy patients they’re sick)

- False negatives (missing real problems)

Both can hurt trust and waste resources.

🔑 Tip: Tune your model carefully. In healthcare, it’s not just about speed; it’s about trust.
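Most models output a risk score, and the team chooses a cutoff above which the tool raises an alert. Moving that cutoff trades false positives against false negatives. The sketch below uses made-up scores and outcomes to show the effect.

```python
def count_errors(scores, labels, threshold):
    """Count false positives and false negatives at a given alert threshold."""
    false_pos = sum(s >= threshold and not y for s, y in zip(scores, labels))
    false_neg = sum(s < threshold and y for s, y in zip(scores, labels))
    return false_pos, false_neg


# Toy data: model risk scores and whether each patient truly had the condition.
scores = [0.95, 0.80, 0.65, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [True, True, False, True, False, False, True, False]

for threshold in (0.25, 0.50, 0.75):
    fp, fn = count_errors(scores, labels, threshold)
    print(f"threshold={threshold:.2f}: false positives={fp}, false negatives={fn}")
```

A low cutoff catches more real cases but raises more false alarms. A high cutoff does the reverse. Which trade-off is acceptable is a clinical decision, not just a technical one.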

5. Be Transparent and Open

Many healthcare AI tools are black boxes: outsiders can’t see how they work. That makes it hard for researchers, hospitals or regulators to check them.

Being secretive might protect your software but it hurts patients.

Example:

During COVID-19, many prediction models were published without enough detail about how they were built, so no one could double-check their methods.

6. Update Regularly and Monitor Performance

Medicine changes fast. New diseases appear. New treatments launch. Patient needs shift.

If your AI is still using data from five years ago, it’s already behind.

You also need to monitor how the AI performs over time. Is it still accurate? Is it making weird mistakes? You can’t just “build it and forget it.”

Example:

An AI trained before the pandemic might not recognize post-COVID symptoms or new patient patterns.
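Monitoring can start as simply as tracking accuracy on recent cases once their true outcomes are known, then raising a flag when accuracy drops well below the level measured at launch. Here is a minimal sketch. The window size and the allowed drop are arbitrary assumptions.

```python
from collections import deque


class PerformanceMonitor:
    """Track rolling accuracy over the most recent cases and flag drift."""

    def __init__(self, baseline_accuracy, window=100, max_drop=0.05):
        self.baseline = baseline_accuracy
        self.max_drop = max_drop
        self.recent = deque(maxlen=window)  # keeps only the last `window` results

    def record(self, prediction, actual_outcome):
        self.recent.append(prediction == actual_outcome)

    def rolling_accuracy(self):
        return sum(self.recent) / len(self.recent) if self.recent else None

    def needs_review(self):
        """True once a full window is in and accuracy has fallen more than max_drop."""
        if len(self.recent) < self.recent.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.max_drop


monitor = PerformanceMonitor(baseline_accuracy=0.90, window=10)
# Simulated outcomes: the model is right on 7 of the last 10 cases.
for correct in [True] * 7 + [False] * 3:
    monitor.record(prediction=1, actual_outcome=1 if correct else 0)
print(monitor.rolling_accuracy(), monitor.needs_review())
```

A flag like this doesn’t say why performance dropped. It only tells the team it is time to investigate, and possibly to retrain on newer data.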

7. Design With the End User in Mind

AI must fit into the daily routines of the doctors, nurses and staff who use it. If it’s hard to use, too slow or interrupts their work, it will be ignored no matter how brilliant it is.

Example:

Many doctors ignore alerts from tools that are buried in confusing dashboards or have clunky interfaces.

Should We Fear or Fix AI in Healthcare?

After reading all these failures, you might be wondering: Is AI really worth the risk?

It’s a fair question. But here’s the truth: AI itself is not the problem. The problem is how we build it, train it and trust it without thinking things through.

Yes, AI has failed. But it has also saved lives.

Some AI tools can detect cancer early, read scans faster, spot drug interactions and even help during surgeries. When done right, AI becomes a powerful teammate for doctors and nurses.

But when done wrong, it becomes a dangerous black box. So, should we fear AI in healthcare? No. We should fix it.

We need to:

- Build AI that’s tested, trusted and transparent

- Make sure it helps all types of patients, not just a few

- Keep humans in charge — always

Let’s not throw away the tool. Let’s improve how we use it.

Conclusion

AI can do great things in healthcare like faster tests, better predictions and smarter tools. But when it fails, the cost is high: wrong treatments, broken trust and wasted money.

We’ve seen how and why these failures happen. The solution is clear:

- Use fair, real-world data

- Test tools in real settings

- Keep humans in control

AI isn’t the problem. Poor planning is. Let’s build AI that helps safely, fairly and for everyone. Because in healthcare, there’s no room for shortcuts.

Common Queries Related to Healthcare AI Failures

Here are some frequently asked questions about AI failures in healthcare:

Does AI make medical decisions on its own?

No, AI doesn’t make final decisions by itself. It gives suggestions based on data, and doctors still make the final call. AI is like a smart assistant, not a replacement for medical professionals.

Why does AI that works in the lab fail in real hospitals?

Labs are controlled and clean, but real hospitals are busy, noisy and unpredictable. AI may struggle with poor lighting, missing data or unusual cases. If it’s not tested in the real world, it won’t perform well in real situations.

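How can I tell if a healthcare AI tool is safe?

Many medical AI tools must be cleared or approved by health authorities such as the FDA before use. Trustworthy tools are tested, reviewed and updated regularly. Always ask whether a tool has been clinically validated before relying on it.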

Can AI be biased?

Yes, if the training data is biased, the AI can be too. For example, it may not work well for certain races, genders or age groups. That’s why diverse and fair data is so important.

What is the difference between good and bad healthcare AI?

Good AI supports doctors, explains its decisions and improves over time. Bad AI gives confusing results, misses important signs or doesn’t work for everyone. The difference often comes down to data quality, testing and design.

Are AI symptom checkers and health chatbots safe to use?

They can be helpful for basic guidance, but they’re not perfect. Use them for early insight, and always follow up with a real doctor, especially for serious symptoms. AI should never replace professional medical advice.

Has AI ever worked well in healthcare?

Yes! Some AI tools help detect cancer early, analyze X-rays or manage hospital workflows more efficiently. These tools work because they were built with good data, strong testing and human input.

How can hospitals avoid AI failures?

They should use tools that are tested in real settings, involve doctors in the design and keep monitoring performance. Training staff to use AI correctly is also key. AI must fit into the hospital’s daily workflow.

What role do doctors play when AI is used?

Doctors stay in control. They use AI to get support, spot patterns or save time, but they still decide what care is given. Think of AI as a second opinion, not the final word.

What should I do if an AI health tool gives me wrong or worrying advice?

Talk to a real healthcare provider immediately. Report the issue to the app or hospital using the tool. Mistakes can happen, and your feedback can help improve the system for others.