What is the Impact of AI in Diagnostic Imaging?

Published: 30 Apr 2025

For many healthcare professionals, interpreting medical images can be time-consuming and prone to errors. AI in diagnostic imaging is transforming the healthcare industry by enabling faster and more accurate diagnoses. A study by researchers at Johns Hopkins Medicine states that AI can reduce human error in reading medical images, increasing diagnostic accuracy by up to 30%. With AI tools like image recognition and analysis, healthcare providers can detect diseases like cancer earlier, thereby giving patients a better chance at treatment and recovery.

AI in Diagnostic Imaging

AI in diagnostic imaging refers to the use of artificial intelligence tools to help doctors understand medical images like X-rays, CT scans, MRIs and ultrasounds. These tools use computer programs that learn from large sets of medical data. They can find patterns, highlight areas of concern and sometimes even suggest a possible diagnosis. This can make the process faster, more accurate and less tiring for doctors. AI does not replace doctors; it supports them in making better decisions.

Key points:

- AI looks for patterns in medical images that may be hard for doctors to spot.

- It uses machine learning to improve as it is trained on more data.

- It helps doctors detect problems early, like tumors or fractures.

- It speeds up the diagnosis process in busy hospitals.

- It reduces the chance of human error during image analysis.

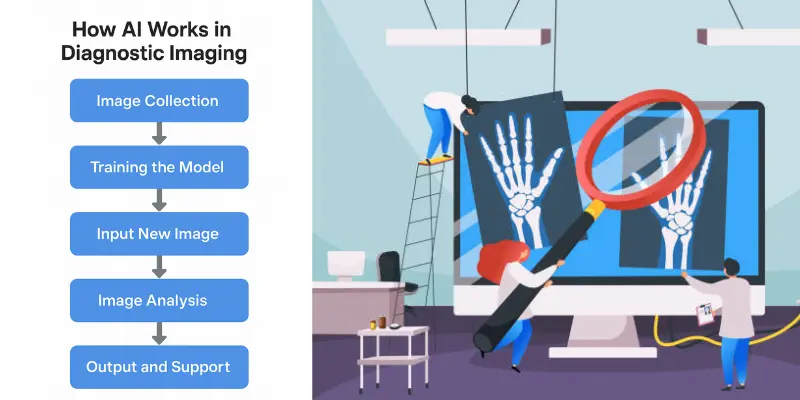

How AI Works in Diagnostic Imaging

AI in diagnostic imaging works by using smart computer programs called algorithms that learn from thousands of medical images. These images include X-rays, CT scans, MRIs and more. The system is trained to recognize patterns and features like the shape of a tumor or the shadow of a broken bone.

Here’s how AI works in diagnostic imaging, step by step:

- Image Collection: Thousands of labeled medical images like CT scans or MRIs are collected and used to teach the AI model.

- Training the Model: The AI learns to spot signs of diseases by studying patterns in the images.

- Input New Image: A doctor uploads a new image like a chest X-ray into the AI system.

- Image Analysis: The AI scans the image, compares it to what it has learned and finds any areas that look abnormal.

- Output and Support: The AI gives results, such as marked areas or diagnosis suggestions, to help the doctor make a final decision.
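The five steps above can be sketched in a few lines of Python. This is only a toy illustration under loud assumptions: the "images" are four-number lists, the labels are invented, and `train` and `analyze` are hypothetical helper names. Real systems learn from thousands of full-size scans with far more complex models.

```python
from statistics import mean

# Step 1 (Image Collection): toy labeled "images".
# Each image is a flat list of pixel intensities (0.0 = dark, 1.0 = bright).
# All values and labels are made up for illustration.
LABELED_IMAGES = [
    ([0.1, 0.2, 0.1, 0.2], "normal"),
    ([0.2, 0.1, 0.2, 0.1], "normal"),
    ([0.1, 0.9, 0.8, 0.2], "abnormal"),
    ([0.2, 0.8, 0.9, 0.1], "abnormal"),
]


def train(labeled_images):
    """Step 2 (Training): learn one average image per label."""
    by_label = {}
    for pixels, label in labeled_images:
        by_label.setdefault(label, []).append(pixels)
    return {
        label: [mean(column) for column in zip(*images)]
        for label, images in by_label.items()
    }


def analyze(model, pixels):
    """Steps 4-5 (Analysis and Output): pick the closest learned average
    and mark pixels that differ strongly from the 'normal' average."""
    def distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    suggestion = min(model, key=lambda label: distance(model[label], pixels))
    normal = model["normal"]
    flagged = [i for i, (p, n) in enumerate(zip(pixels, normal)) if abs(p - n) > 0.5]
    return {"suggestion": suggestion, "flagged_pixels": flagged}


model = train(LABELED_IMAGES)

# Step 3 (Input New Image): a new, unlabeled image arrives.
result = analyze(model, [0.15, 0.85, 0.9, 0.15])
print(result)  # {'suggestion': 'abnormal', 'flagged_pixels': [1, 2]}
```

The output is a suggestion plus marked areas, not a diagnosis. The doctor still makes the final decision.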

Common Misconceptions and the Truth

Let’s clear up what people often get wrong:

- Misconception 1: AI replaces doctors.

➤ Truth: AI helps doctors but it doesn’t replace them. It’s like an assistant that points things out.

- Misconception 2: AI is always right.

➤ Truth: AI can make mistakes too. That’s why doctors always double-check its results.

- Misconception 3: AI understands the body.

➤ Truth: AI doesn’t “understand” like humans. It spots patterns and doesn’t “feel” or “think.”

- Misconception 4: AI works instantly.

➤ Truth: It needs training, updates and testing before it works well.

- Misconception 5: AI is fully automatic.

➤ Truth: Humans still manage the process and approve the final diagnosis.

Quick Example:

Let’s say a radiologist is checking a brain MRI. The AI spots a tiny area that looks like a tumor and marks it. The doctor sees the mark, agrees with the finding and starts treatment earlier than they might have otherwise. That’s the power of working together—AI plus doctor.

Types of AI in Diagnostic Imaging

AI in diagnostic imaging comes in different forms. Each type plays a special role in helping doctors understand medical images better and faster.

- Rule-Based AI: Follows fixed “if-then” instructions to detect known patterns.

- Machine Learning (ML): Learns from past images to improve over time.

- Deep Learning (DL): A kind of machine learning that uses layered neural networks to spot complex features in images.

- Convolutional Neural Networks (CNNs): Deep learning models designed specifically for analyzing images.

- Natural Language Processing (NLP): Helps turn doctor notes and image reports into useful data.

- Hybrid AI Systems: Combine different AI types to improve accuracy and performance.

- Cloud-Based AI: A way of delivering AI rather than a separate technique. Scans are processed on remote servers, so the hospital does not need special hardware on site.
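To make the difference between the first two types in the list concrete, here is a minimal sketch. It assumes each suspicious spot has been reduced to a single made-up "brightness" number. The function names and data are hypothetical, and real ML models learn far richer features than one threshold.

```python
from statistics import mean


def rule_based_flag(brightness, threshold=0.7):
    """Rule-based AI: a fixed, hand-written if-then rule."""
    return brightness > threshold


def learn_threshold(examples):
    """Machine learning in its simplest form: derive the threshold from
    labeled data (midpoint between the average normal and abnormal value)."""
    normal = [value for value, is_abnormal in examples if not is_abnormal]
    abnormal = [value for value, is_abnormal in examples if is_abnormal]
    return (mean(normal) + mean(abnormal)) / 2


# Hypothetical training data: (spot brightness, labeled abnormal?)
examples = [(0.2, False), (0.3, False), (0.5, True), (0.6, True)]
learned = learn_threshold(examples)

spot = 0.55
print(rule_based_flag(spot))  # False: the fixed rule misses this spot
print(spot > learned)         # True: the learned threshold catches it
```

The fixed rule only changes when someone rewrites it. The learned threshold shifts whenever the labeled examples change, which is what "learns from past images" means here.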

Evolution of AI in Diagnostic Imaging: A Simple Timeline

AI in diagnostic imaging didn’t appear overnight. It started with small steps and grew with better technology and smarter tools. Let’s walk through the journey.

1. Early 1990s: Rule-Based Systems

- These early systems followed if-then rules, like:

➤ If a white spot is this shape, it might be a tumor.

- They were slow and needed human help to work.

- Not very accurate but a big first step!

2. 2000s: Machine Learning Begins

- Computers started learning from data instead of following rules.

- Doctors gave the AI many labeled images to learn from.

- The more images it saw, the better it got at spotting patterns.

3. 2010s: Deep Learning and Neural Networks

- Deep learning made AI smarter.

- AI could now handle very complex images like MRIs and CT scans.

- Research systems, including work from Google’s DeepMind, were reported to match specialists on specific tasks such as reading eye scans.

- Radiology tools began using this AI to support real hospital work.

4. 2020s: Widespread Clinical and Cloud-Based AI

- AI tools became faster and easier to access (often cloud-based).

- Hospitals started using AI regularly for chest X-rays, mammograms and brain scans.

- AI began helping with triage (deciding which patients need urgent care first).

- Tools now work across devices, hospitals and even countries.

Now and Future: Hybrid AI with Human-AI Teams

- Today’s AI doesn’t work alone—it partners with radiologists.

- AI suggests; doctors decide.

- In the future, we may see AI giving full reports, guiding surgeries or helping in remote care areas.

Example:

In 2005, an AI might only check a chest X-ray for pneumonia using fixed rules. Today, AI can check for over 20 conditions in seconds and highlight which ones need urgent attention.

Clinical Applications of AI in Diagnostic Imaging

AI is now part of everyday work in many hospitals. It helps doctors read images faster, find diseases earlier and even guide treatment choices. Let’s look at how AI is being used in real clinical settings.

Detecting Diseases from X-rays and CT Scans

AI can quickly scan chest X-rays, CT scans or MRIs to find signs of disease. It spots things like lung infections, fractures or tumors—often faster than a human eye. This helps doctors to treat patients earlier and avoid missing critical problems.

- Spots pneumonia, COVID-19 or TB in chest scans

- Detects small tumors or nodules doctors might miss

- Flags urgent cases for quick review
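Flagging urgent cases usually comes down to reordering the reading list. The sketch below assumes the AI has already given each scan an urgency score between 0 and 1. The scan IDs, the scores and the `build_worklist` helper are invented for illustration.

```python
import heapq


def build_worklist(scans):
    """Return scan IDs ordered so the highest urgency score comes first.
    Scans with equal scores keep their arrival order."""
    # heapq is a min-heap, so the score is negated to pop the largest first.
    heap = [(-score, arrival, scan_id) for arrival, (scan_id, score) in enumerate(scans)]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


# Hypothetical incoming scans: (scan ID, AI urgency score)
scans = [("scan-A", 0.10), ("scan-B", 0.92), ("scan-C", 0.55), ("scan-D", 0.92)]
worklist = build_worklist(scans)
print(worklist)  # ['scan-B', 'scan-D', 'scan-C', 'scan-A']
```

Every scan still gets read by a radiologist. The score only changes which one is opened first.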

Brain Imaging and Stroke Detection

AI helps in emergencies by reading brain scans quickly. It looks for strokes, bleeding or tumors in CT or MRI brain images and sends alerts so doctors can act fast.

- Detects early signs of stroke within minutes

- Helps spot brain tumors or bleeding

- Guides decisions for urgent care

Bone Fracture and Injury Analysis

In busy emergency rooms, AI assists in reading bone X-rays. It highlights breaks, dislocations or joint issues, saving time for doctors and patients.

- Finds tiny fractures that are hard to see

- Helps doctors to check sports injuries faster

- Speeds up care for trauma cases

Cancer Detection and Monitoring

AI is being used to find different types of cancers like breast, lung or colon cancer at earlier stages. It also tracks how a tumor changes over time which helps doctors to see if treatment is working.

- Identifies abnormal growths in mammograms or scans

- Compares past and present images for progress

- Suggests if further tests are needed
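Comparing past and present images often reduces to simple arithmetic on measurements. Here is a minimal sketch, assuming the tumor's longest diameter has already been measured in millimetres on both scans. The cut-off percentages are illustrative parameters, not clinical criteria, and the function names are hypothetical.

```python
def percent_change(previous_mm, current_mm):
    """Relative change in size between two scans, in percent."""
    return (current_mm - previous_mm) / previous_mm * 100


def summarize(previous_mm, current_mm, shrink_pct=-30.0, grow_pct=20.0):
    """Label the trend using illustrative (non-clinical) cut-offs."""
    change = percent_change(previous_mm, current_mm)
    if change <= shrink_pct:
        trend = "shrinking"
    elif change >= grow_pct:
        trend = "growing"
    else:
        trend = "stable"
    return round(change, 1), trend


print(summarize(20.0, 13.0))  # (-35.0, 'shrinking')
print(summarize(20.0, 21.0))  # (5.0, 'stable')
```

A result like this is a prompt for the doctor to look closer, for example to decide whether further tests are needed.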

Radiology Report Generation

AI doesn’t just look at pictures. It can help write reports too. It reads the scan, compares it to patterns and drafts a report that a radiologist reviews and finalizes.

- Saves time by pre-filling standard details

- Reduces errors in written reports

- Frees up time for radiologists to focus on patients

How AI in Diagnostic Imaging Differs in Developed vs Developing Countries

AI in diagnostic imaging is helping doctors everywhere but it works a bit differently depending on the country. The needs, resources and goals can vary a lot between developed and developing countries. Let’s break it down.

In Developed Countries

In places like the USA, UK or Germany, hospitals have better tools and faster internet. They use AI to save time, reduce doctor workload and increase accuracy.

- Advanced machines work with AI to scan and analyze images in seconds.

- AI helps radiologists focus on hard cases by filtering out normal scans.

- Hospitals use cloud-based AI systems that update regularly with new data.

Example:

A hospital in New York might use AI to compare thousands of patient scans and find early signs of cancer that even experts might miss.

In Developing Countries

In places like India, Nigeria or Bangladesh, AI is used to fill gaps where there aren’t enough doctors or equipment. It makes healthcare more affordable and reachable.

- AI tools are used on mobile devices or low-cost systems.

- It helps rural clinics where expert radiologists are not available.

- Some AI apps work offline and are trained on local health data.

Example:

A small clinic in a remote area might use an AI-powered app to scan chest X-rays and check for tuberculosis without needing a specialist.

Key Differences at a Glance

| Feature | Developed Countries | Developing Countries |
|---|---|---|
| Main Use | Speed, accuracy, support for doctors | Access, affordability, coverage |
| Technology | Cloud-based, integrated with hospital systems | Mobile-first, offline options |
| Focus | Efficiency and automation | Basic diagnosis and outreach |

In short, AI in diagnostic imaging is flexible. In developed countries, it boosts performance. In developing countries, it becomes a lifesaver by reaching people who usually don’t get expert care.

Advantages of AI in Diagnostic Imaging

Here are the pros of AI in diagnostic imaging for professionals, students and researchers.

| Audience | Benefits |
|---|---|
| For Work (Doctors & Radiologists) | AI helps professionals do their jobs faster and better. |
| For Study (Medical Students & Trainees) | AI becomes a smart learning tool. |
| For Research (Scientists & Analysts) | AI helps researchers to find new medical patterns. |

Disadvantages of AI in Diagnostic Imaging

Here are the cons of AI in diagnostic imaging for professionals, students and researchers.

| Audience | Drawbacks |
|---|---|
| For Work (Doctors & Radiologists) | AI can’t replace human judgment. |
| For Study (Medical Students & Trainees) | Overreliance on AI can hurt learning. |
| For Research (Scientists & Analysts) | AI has technical and data limits. |

Conclusion

AI in diagnostic imaging is changing the way we understand and approach healthcare. With its ability to quickly analyze images and spot problems that might be missed by the human eye, it offers hope for more accurate diagnoses and better patient outcomes. If you are curious about how this technology is reshaping the future of healthcare, now is the time to dive deeper into this exciting field. The potential is huge and the more we explore, the closer we get to transforming healthcare for the better. Keep learning, stay curious and be part of the AI-driven solutions in healthcare!

Related Queries About AI in Diagnostic Imaging

Here are frequently asked questions about the role of AI in diagnostic imaging:

AI specialists need a combination of computer science and healthcare knowledge. Most have degrees in computer science, data science or biomedical engineering with specialized training in machine learning. Many teams also include medical professionals who provide clinical expertise to guide AI development.

Implementation costs vary widely from $50,000 for basic systems to several million dollars for comprehensive hospital-wide solutions. The expense covers software licenses, hardware upgrades, integration with existing systems and staff training. Many vendors now offer subscription models to make adoption more accessible for smaller facilities.

Yes, privacy is a major concern when AI systems process sensitive medical data. Patient images must be properly anonymized before being used for AI training or analysis. Healthcare facilities must comply with regulations like HIPAA and ensure secure data storage and transmission.
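As a rough sketch of what anonymizing can involve, the example below strips identifying fields from a scan's metadata before it is shared. The metadata is a plain, made-up dictionary, and the field list and `anonymize` helper are assumptions for illustration. Real de-identification covers many more fields and sometimes text burned into the image itself.

```python
# Illustrative list only; a real de-identification policy is much longer.
IDENTIFYING_FIELDS = {"PatientName", "PatientID", "PatientBirthDate"}


def anonymize(metadata):
    """Return a copy of the metadata without the identifying fields."""
    return {key: value for key, value in metadata.items() if key not in IDENTIFYING_FIELDS}


# Hypothetical scan metadata
scan_metadata = {
    "PatientName": "Jane Example",
    "PatientID": "12345",
    "PatientBirthDate": "1980-01-01",
    "Modality": "CT",
    "BodyPartExamined": "CHEST",
}

clean = anonymize(scan_metadata)
print(clean)  # {'Modality': 'CT', 'BodyPartExamined': 'CHEST'}
```

The original record is left untouched, so the hospital keeps the full data while only the cleaned copy leaves the building.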

Training a medical imaging AI system typically takes several months to over a year depending on complexity. The process requires thousands of correctly labeled images representing diverse patient populations. After initial training, systems continue to improve through ongoing updates with new data.

In some cases, AI can detect subtle patterns associated with early disease that aren’t yet causing symptoms. Systems have demonstrated the ability to identify early signs of conditions like lung cancer or Alzheimer’s disease before they would typically be diagnosed. However, these early detections still require confirmation through additional testing.

When AI makes an incorrect assessment, the supervising healthcare professional should catch the error during their review. Medical AI tools are considered decision support systems rather than autonomous diagnostic tools, so physicians maintain legal responsibility for diagnoses. This is why the “human-in-the-loop” approach remains standard practice in healthcare AI.

Rather than replacing radiologists, AI is transforming their role to focus more on complex cases and patient interaction. Studies show that radiologists who work with AI achieve better results than either humans or AI working alone. The field is evolving toward a collaborative model where AI handles routine screenings while radiologists provide expertise on difficult cases.

Doctors verify AI interpretations by comparing them with their own professional judgment and patient history. Medical facilities also regularly audit AI performance against known outcomes and have quality assurance processes. Many systems provide confidence scores and visual explanations that help doctors understand why the AI reached a particular conclusion.
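Auditing AI performance against known outcomes can be as simple as counting hits and misses. The sketch below computes two common measures, sensitivity and specificity, from made-up data. The `audit` helper is hypothetical and assumes the audit set contains at least one confirmed positive and one confirmed negative case.

```python
def audit(ai_flags, confirmed):
    """Compare AI flags with confirmed outcomes (True = disease present).
    Assumes at least one positive and one negative confirmed case."""
    pairs = list(zip(ai_flags, confirmed))
    true_pos = sum(flag and truth for flag, truth in pairs)
    false_neg = sum((not flag) and truth for flag, truth in pairs)
    true_neg = sum((not flag) and (not truth) for flag, truth in pairs)
    false_pos = sum(flag and (not truth) for flag, truth in pairs)
    return {
        # Share of real cases the AI caught
        "sensitivity": true_pos / (true_pos + false_neg),
        # Share of healthy scans the AI correctly left unflagged
        "specificity": true_neg / (true_neg + false_pos),
    }


# Hypothetical audit sample of eight scans
ai_flags = [True, True, False, False, True, False, False, False]
confirmed = [True, True, True, False, False, False, False, False]

report = audit(ai_flags, confirmed)
print(report)
```

Here the AI caught two of three real cases and correctly cleared four of five healthy scans. A facility would track numbers like these over time to spot a drop in performance.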

Yes, patients typically maintain the right to request that their images be interpreted by a human specialist without AI assistance. Healthcare providers generally have protocols for accommodating such requests while explaining the potential benefits of AI-assisted diagnosis. The final diagnostic decision always comes from a qualified healthcare professional regardless of whether AI is used in the process.

Medical imaging AI is regulated by agencies like the FDA in the US and similar bodies worldwide. These tools must undergo clinical validation studies proving their safety and effectiveness before approval. Ongoing monitoring tracks performance in real-world settings, with requirements for reporting adverse events or unexpected results.